
Abstract

This article examines what spatial resolution is, why it matters, how it affects data and analysis, and how it is likely to develop in modern information technology and scientific research. It also describes the spatial resolution of drones, radar satellites, and LiDAR imaging.

Introduction

Spatial resolution (pixel size) is the size of the area on the Earth's surface covered by one pixel. For example, if each pixel covers an area of 1 by 1 meter, the spatial resolution is 1 meter. The higher the resolution of an image, the smaller the pixel size and the greater the detail.

The spatial resolution of an aerospace image is a measure that characterizes the size of the smallest objects distinguishable in the image. Simply put, the spatial resolution of an aerospace image is the size of one pixel, i.e., the smallest point distinguishable by a satellite sensor.

The "spatial resolution" metric is suitable for assessing the size of the smallest object (or its individual detail) that can be depicted in the image. The "resolving power" metric, on the other hand, is more appropriate for evaluating the ability of the image to distinctly convey closely spaced linear objects.

The Relationship Between Spatial Resolution and Images, Maps, Data Models, and Other Spatial Representations

Spatial resolution plays a crucial role in various domains related to spatial representation, such as images, maps, data models, and other forms of visualization.

In digital photography and visual processing, spatial resolution determines the level of detail in an image. Higher resolution results in sharper and more detailed images.

In cartography, spatial resolution is essential for creating detailed maps. The higher the spatial resolution, the more accurately geographical features, locations, and topography are represented. In GIS (Geographic Information Systems), spatial resolution also determines the precision of spatial analyses.

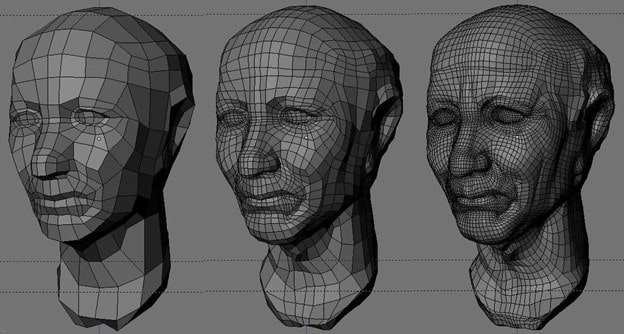

In 3D modeling, spatial resolution plays a crucial role in determining the level of detail of three-dimensional objects. Higher resolution results in more accurate and realistic models.

In geographic information systems, the spatial resolution of the data limits the positional accuracy of geodata, such as the locations of points of interest and territorial boundaries.

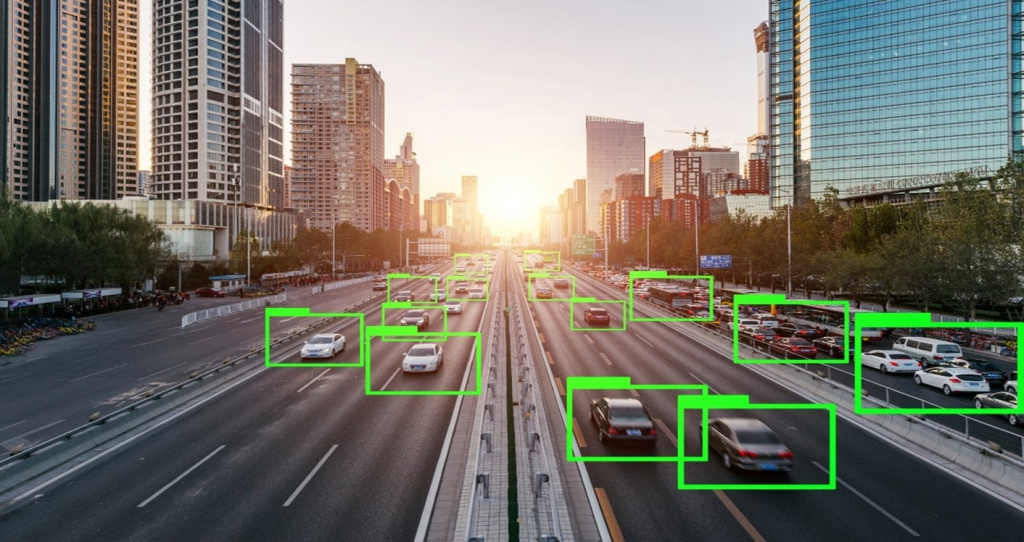

In computer vision, high spatial resolution is critical. For instance, traffic security cameras must have high spatial resolution to recognize vehicles and their license plates accurately. Otherwise, situations may arise where a camera incorrectly identifies a license plate, resulting in an honest driver receiving a fine for speeding while the actual violator goes unpunished.

Pixel Size of Raster Data and Scale

The spatial resolution of remote sensing images depends on several key factors. First and foremost are the optical characteristics of the sensor used on the satellite or aircraft. The main parameters include the pixel size on the detector matrix and the focal length of the optical system. Smaller pixel sizes and longer focal lengths result in higher spatial resolution, enabling the identification of smaller objects on Earth's surface. Additionally, satellite orbital parameters, such as the altitude of the flight above the Earth's surface, significantly influence resolution: lower orbits yield higher resolution because the sensor is closer to Earth.
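As a rough illustration of how these parameters interact, the sketch below uses the common pinhole-camera approximation for a nadir-looking sensor, GSD = detector pixel pitch × altitude / focal length, where GSD (ground sample distance) is the ground size of one pixel. The function name and the sensor values are hypothetical, and real systems also lose detail to optics, platform motion, and the atmosphere, so the result is a best-case figure.

```python
def ground_sample_distance(pixel_pitch_um: float, focal_length_mm: float, altitude_km: float) -> float:
    """Ground sample distance (m) for a nadir-looking sensor.

    Pinhole approximation: GSD = pixel pitch * altitude / focal length.
    """
    pixel_pitch_m = pixel_pitch_um * 1e-6
    focal_length_m = focal_length_mm * 1e-3
    altitude_m = altitude_km * 1e3
    return pixel_pitch_m * altitude_m / focal_length_m


# Hypothetical sensor: 8 um detector pixels and a 10 m focal length.
for altitude in (400, 500, 700):
    gsd = ground_sample_distance(8, 10_000, altitude)
    print(f"{altitude} km orbit -> {gsd:.2f} m per pixel")
```

Lowering the orbit, shrinking the detector pixels, or lengthening the focal length all reduce the GSD, in line with the factors listed above.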

Another important factor is how the sensor acquires data. For passive sensors, which record reflected solar radiation, the achievable detail also depends on lighting conditions: better illumination produces sharper images. Active sensors, such as radars, behave differently: their resolution depends on the beam width, signal frequency, and viewing angle. Finally, the number and width of spectral channels play a role: narrow spectral bands provide higher spectral resolution but collect less light per band, so they often require a compromise with spatial resolution.

Images where pixels correspond to large surface areas are referred to as low-resolution images, as they contain fewer details.

When working with high-resolution data, storage requirements must be considered. Halving the pixel size quadruples the number of pixels needed to cover the same area, and storage needs grow accordingly.

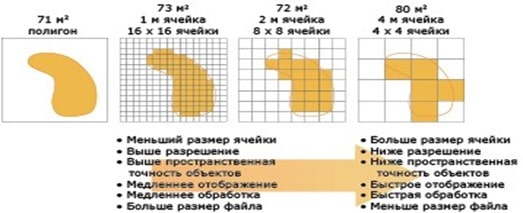

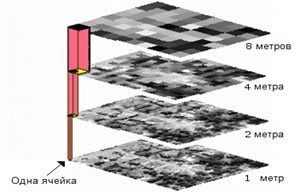

The level of detail represented in an image depends on pixel size. Pixels must be small enough to describe objects of the required size in detail, but large enough to avoid overburdening storage and computational performance during analysis. Smaller pixels can represent more ground objects or smaller object details but result in larger raster datasets that require more storage and longer processing times.
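The storage cost of a smaller pixel can be estimated directly, because the pixel count grows with the inverse square of the pixel size. The sketch below assumes an uncompressed raster with square pixels; the function name, band count, and bit depth are illustrative assumptions, and real file sizes also depend on compression and format overhead.

```python
def raster_size_bytes(area_km2: float, pixel_size_m: float,
                      bands: int = 1, bytes_per_value: int = 1) -> int:
    """Estimate the uncompressed size of a raster covering area_km2 with square pixels."""
    pixel_count = area_km2 * 1_000_000 / pixel_size_m ** 2
    return round(pixel_count * bands * bytes_per_value)


# 100 km^2 scene, 4 bands stored as 16-bit (2-byte) values.
for pixel_size in (30, 10, 5, 1):
    size_mb = raster_size_bytes(100, pixel_size, bands=4, bytes_per_value=2) / 1e6
    print(f"{pixel_size:>2} m pixels -> {size_mb:,.1f} MB")
```

Going from 10 m to 5 m pixels quadruples the size, and going from 10 m to 1 m multiplies it by 100.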

When selecting an appropriate pixel size, it is essential to consider the minimum mapping unit for ground objects to be analyzed and the requirements for display speed, processing time, and storage capacity.

In GIS, when working with a classified dataset derived from satellite images with a resolution of 30 meters, using a digital elevation model (DEM) or auxiliary data with higher resolution, such as 10 meters, may be unnecessary. The more homogeneous the area of critical variables, such as topography and land cover, the larger the cell size can be without sacrificing accuracy.
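One simple way to bring a finer auxiliary layer onto the 30-meter grid is to average each 3 × 3 block of 10-meter cells. The NumPy sketch below assumes the two grids are already aligned so that block boundaries coincide; the function name is hypothetical, and GIS packages offer other resampling methods (for example bilinear, or majority for categorical data) whose suitability depends on the variable.

```python
import numpy as np


def aggregate_mean(grid: np.ndarray, factor: int) -> np.ndarray:
    """Coarsen a 2-D raster by averaging non-overlapping factor x factor blocks.

    Rows and columns that do not fill a complete block are dropped.
    """
    rows = grid.shape[0] // factor * factor
    cols = grid.shape[1] // factor * factor
    blocks = grid[:rows, :cols].reshape(rows // factor, factor, cols // factor, factor)
    return blocks.mean(axis=(1, 3))


# Hypothetical 6 x 6 elevation grid with 10 m cells -> 2 x 2 grid with 30 m cells.
dem_10m = np.arange(36, dtype=float).reshape(6, 6)
dem_30m = aggregate_mean(dem_10m, 3)
print(dem_30m)  # 2 x 2 result: block means 7, 10, 25, 28
```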

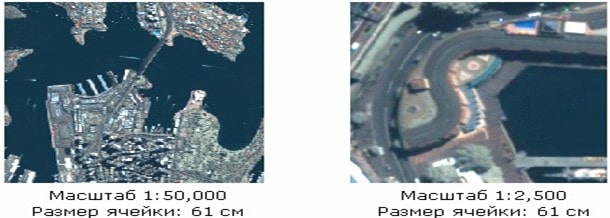

It can be concluded that the higher the image resolution, the smaller the pixel size and the greater the detail. Scale works differently: the smaller the scale, the less detail is shown. For example, an orthophoto displayed at a larger scale shows more detail than the same image displayed at 1:25,000. However, if the pixel size of this orthophoto is 5 meters, its resolution remains unchanged at any display scale, because the physical pixel size does not change.
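The difference between scale and resolution can be made concrete by computing how large one 5-meter image pixel is drawn on the map at different scales. The helper below is a hypothetical illustration: the ground pixel size stays fixed, and only its size on paper or screen changes.

```python
def pixel_size_on_map_mm(ground_pixel_m: float, scale_denominator: float) -> float:
    """Size (mm) at which one ground pixel is drawn on a map at scale 1:scale_denominator."""
    return ground_pixel_m / scale_denominator * 1000


for denominator in (50_000, 25_000, 10_000):
    size_mm = pixel_size_on_map_mm(5, denominator)
    print(f"1:{denominator:,} -> a 5 m pixel is drawn {size_mm:.2f} mm wide")
```

At 1:10,000 each pixel is drawn larger and is easier to see, but it still represents the same 5 × 5 m patch of ground, so no new detail appears.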

The following figure demonstrates that the scale of the first image (1:50,000) is smaller than the scale of the second image (1:25,000), although the spatial resolution of the data remains the same.

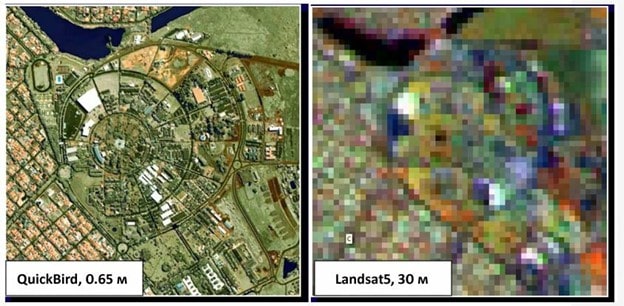

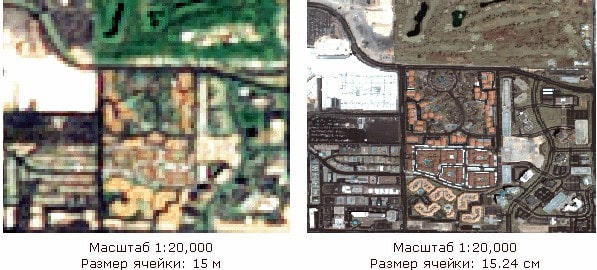

The next figure shows two images displayed at the same scale. However, the first image has lower spatial resolution than the second image.

When selecting pixel size, consider the following factors:

- Spatial resolution of input data;

- The application domain and analysis to be performed based on the minimum mapping unit;

- Final database size relative to available storage space.

Spatial resolution in remote sensing refers to the capability of sensor systems, such as satellites or aerial photography, to distinguish objects of a certain size on Earth's surface. Typically, spatial resolution in remote sensing is classified based on the diameter of the minimum discernible element (MDE), measured in meters on the ground for a given observation system.

- Low-resolution images usually have an MDE of over 30 meters. These are generally unsuitable for detailed study of individual objects but are useful for analyzing large-scale natural phenomena or changes over vast areas.

- Medium-resolution images typically have an MDE ranging from 5 to 30 meters. These are often used for cartographic and geographic research, as well as for monitoring medium-scale objects and changes.

- High-resolution images provide MDEs ranging from 1 to 5 meters. These are ideal for studying urban areas, infrastructure, land use analysis, large object management, and other applications requiring detailed information.

- Very high-resolution systems provide images with an MDE of less than 1 meter. These images deliver very detailed information about small objects and are commonly used for urban planning, cartography, military, and specialized research purposes.
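The four classes above can be expressed as a simple lookup on the MDE. The text does not say which class the boundary values belong to, so the sketch assumes that exactly 5 m counts as high and exactly 30 m as medium resolution; the function name is illustrative.

```python
def resolution_class(mde_m: float) -> str:
    """Classify imagery by minimum discernible element (MDE) in meters."""
    if mde_m <= 0:
        raise ValueError("MDE must be a positive number of meters")
    if mde_m < 1:
        return "very high"
    if mde_m <= 5:   # assumption: exactly 5 m counts as high
        return "high"
    if mde_m <= 30:  # assumption: exactly 30 m counts as medium
        return "medium"
    return "low"


for mde in (0.3, 3, 10, 250):
    print(f"{mde} m -> {resolution_class(mde)}")
```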

This classification reflects how much detail an observation system can capture and, therefore, which research and application tasks its imagery can serve.

Spatial Resolution of Radar Satellites

Radar observation satellites use Synthetic Aperture Radar (SAR) technology. Worldwide, increasing attention is being paid to developing SAR systems and software that generate radar images with high (1 m or more) and ultra-high (less than 1 m) resolution.

| Task / object | Detection, m | Recognition, m (general / precise) | Detailed description, m |
|---|---|---|---|
| General Remote Sensing Tasks | | | |
| Ice formation monitoring | – | 1000 / 300 | – |
| Navigation, fishing | 300 | 30 / 15 | – |
| Vegetation monitoring, forests, land use | – | 25 / – | 2–5 |
| Environmental monitoring | – | 25 / – | 2–5 |
| Geological mapping | – | 25 / – | 3 |
| Emergency Monitoring | | | |
| Earthquakes, eruptions | – | 25 / – | 2–5 |
| Pipeline accidents | – | – | 2–5 |
| Mapping | | | |
| Terrain | – | 90 / 4.5 | 1.5 |
| Settlements | 60 | 30 / 3 | 3 |
| Roads | 6–9 | 6 / 1.8 | 0.6 |
| Bridges | 6 | 4.5 / 1.5 | 1 |
| Object Detection and Interpretation | | | |
| Ships | 7.6–15 | 4.5 / 0.6 | 0.3 |
| Airplanes | 4.5 | 1.5 / 1 | 0.15 |
| Vehicles | 1.5 | 0.6 / 0.3 | 0.05 |
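To apply the table above to a specific sensor, one can check which interpretation levels a given resolution satisfies for each object. The sketch copies the rows for roads, bridges, ships, airplanes, and vehicles; where the table gives a range (6–9 m for roads, 7.6–15 m for ships), it conservatively uses the finer value. The names `LEVELS`, `REQUIREMENTS`, and `achievable_levels` are illustrative, not part of any library.

```python
# Thresholds in meters copied from the table: detection, general recognition,
# precise recognition, detailed description. Ranges use their finer bound.
LEVELS = ("detection", "general recognition", "precise recognition", "detailed description")
REQUIREMENTS = {
    "Roads": (6.0, 6.0, 1.8, 0.6),
    "Bridges": (6.0, 4.5, 1.5, 1.0),
    "Ships": (7.6, 4.5, 0.6, 0.3),
    "Airplanes": (4.5, 1.5, 1.0, 0.15),
    "Vehicles": (1.5, 0.6, 0.3, 0.05),
}


def achievable_levels(resolution_m: float) -> dict[str, list[str]]:
    """For each object, list the levels whose required resolution is met."""
    return {
        name: [level for level, required in zip(LEVELS, thresholds) if resolution_m <= required]
        for name, thresholds in REQUIREMENTS.items()
    }


for name, levels in achievable_levels(1.0).items():
    print(f"{name}: {', '.join(levels) or 'none'}")
```

At 1 m, for example, vehicles can only be detected, while bridges reach the detailed-description level.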

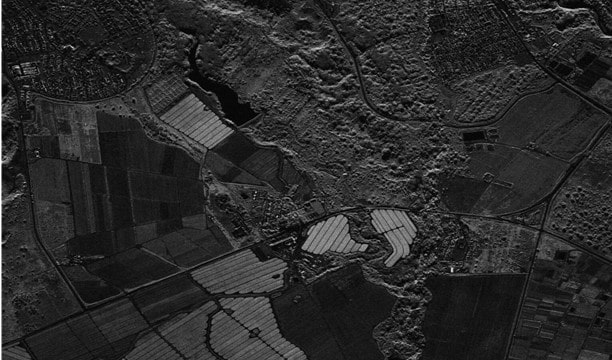

Radar images have a geometry that differs from that of optical images. Imaging radars are side-looking: they view the surface obliquely, perpendicular to the flight direction, rather than vertically. This leads to geometric distortions, such as compression of the foreground and stretching of the background. In addition, a "shadow effect" occurs: tall objects block the radar beam from reaching the areas behind them, creating shadows in the radar image.

Resolution in radar imaging has two components: azimuth and range. Azimuth resolution, along the flight direction, is formed by the motion of the radar platform and depends on the length of the synthesized aperture: the longer the flight path over which a target is observed, the finer the detail along the direction of motion of the satellite or aircraft. Range resolution, across the flight direction, is determined by the duration of the radar pulse (or, when pulse compression is used, by the signal bandwidth): shorter pulses separate objects that lie closer together in range. Because the two components are controlled by different parameters, radar resolution can be tuned to the task.
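The textbook approximations behind these statements can be sketched as follows: slant-range resolution c·τ/2 for a pulse of duration τ (c/2B for a compressed pulse of bandwidth B), projection onto flat ground by dividing by the sine of the incidence angle, and azimuth resolution λR/L for a real antenna of length L, compared with roughly L/2 for a stripmap SAR. All radar parameters in the example are hypothetical, and real systems add processing losses and mode-specific effects (spotlight modes, for instance, can do better than L/2).

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def slant_range_resolution(pulse_duration_s: float) -> float:
    """Slant-range resolution c * tau / 2 (for a chirped pulse, pass 1 / bandwidth)."""
    return C * pulse_duration_s / 2


def ground_range_resolution(slant_resolution_m: float, incidence_deg: float) -> float:
    """Project slant-range resolution onto flat ground by dividing by sin(incidence angle)."""
    return slant_resolution_m / math.sin(math.radians(incidence_deg))


def real_aperture_azimuth_resolution(wavelength_m: float, slant_range_m: float,
                                     antenna_length_m: float) -> float:
    """Along-track beam footprint of a real-aperture radar: lambda * R / L."""
    return wavelength_m * slant_range_m / antenna_length_m


def stripmap_sar_azimuth_resolution(antenna_length_m: float) -> float:
    """Classic stripmap SAR approximation L / 2, independent of range."""
    return antenna_length_m / 2


# Hypothetical X-band system: 10 ns pulse, 35 deg incidence, 3.1 cm wavelength,
# 600 km slant range, 4.8 m antenna.
slant = slant_range_resolution(10e-9)
print(f"slant range: {slant:.2f} m, ground range: {ground_range_resolution(slant, 35):.2f} m")
print(f"real-aperture azimuth: {real_aperture_azimuth_resolution(0.031, 600_000, 4.8):.0f} m")
print(f"stripmap SAR azimuth: {stripmap_sar_azimuth_resolution(4.8):.1f} m")
```

The contrast between the last two lines (kilometers versus a few meters) is the reason for synthesizing a long aperture from the platform's motion.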

On August 7, 2023, the space radar company Umbra announced that it had produced a Synthetic Aperture Radar (SAR) image with a resolution of 16 cm, which it described as the highest-resolution commercial satellite image ever released.

| Satellite | Spatial resolution |
|---|---|
| TerraSAR-X / TanDEM-X | Ultra-high (1 m or finer) |
| Lutan-1 A/B (L-SAR) | Ultra-high (1 m or finer) |
| HiSea-1 | Ultra-high (1 m or finer) |
| CSG-1 | Ultra-high (1 m or finer) |
| COSMO-SkyMed | Ultra-high (1 m or finer) |
| Sentinel-1A, 1B | Medium (2.5–10 m) |
| KOMPSAT-5 | Ultra-high (1 m or finer) |
| Gaofen-3 | Ultra-high (1 m or finer) |
| ICEYE SAR | Ultra-high (1 m or finer) |
| Radarsat-2 | Medium (2.5–10 m) |
| Radarsat-1 | Medium (2.5–10 m) |
| Asnaro-2 | Ultra-high (1 m or finer) |
| ENVISAT | Low (coarser than 10 m) |
| ERS-2 | Low (coarser than 10 m) |
| ALOS-2 / DAICHI-2 (PALSAR-2) | Medium (2.5–10 m) |

Spatial Resolution of Optical and Video Cameras on Drones and UAVs

The spatial resolution of imagery from unmanned aerial vehicles (UAVs) depends on the flight altitude, the sensor characteristics, and the type of camera mounted on the drone. The lower the flight altitude and the better the sensor, the higher the image resolution, allowing the finest surface details to be captured. UAVs are often equipped with advanced optical and multispectral cameras that can acquire images with a resolution of a few centimeters per pixel.
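For flight planning, the pinhole relation GSD = pixel pitch × altitude / focal length can be rearranged to find the altitude that gives a target ground pixel size. The camera values below are hypothetical, the function name is illustrative, and the result assumes a nadir view over flat terrain.

```python
def altitude_for_gsd(target_gsd_cm: float, pixel_pitch_um: float, focal_length_mm: float) -> float:
    """Flight altitude (m) at which a nadir camera reaches target_gsd_cm per pixel.

    Rearranges GSD = pixel pitch * altitude / focal length.
    """
    gsd_m = target_gsd_cm / 100
    return gsd_m * (focal_length_mm * 1e-3) / (pixel_pitch_um * 1e-6)


# Hypothetical camera: 2.4 um pixels behind an 8.8 mm lens.
for gsd_cm in (1, 2, 5):
    print(f"{gsd_cm} cm/pixel -> fly at about {altitude_for_gsd(gsd_cm, 2.4, 8.8):.0f} m")
```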

Another important factor is the type of sensors used on UAVs. For example, drones can be equipped with standard optical cameras, LiDAR, or radar systems, significantly broadening their range of applications and increasing data accuracy. High spatial resolution makes UAV data valuable for agriculture, cartography, infrastructure monitoring, and natural resource management.

Image resolution, expressed as the number of pixels, indicates the size of the image but says little about the size of the depicted objects. Pixel resolution matters for data exchange, storage, image display, and digital zooming.

Low-resolution images contain up to 1 MP (megapixel). They show minimal detail and are unsuitable for printing or editing.

Medium-resolution images contain 1 to 5 MP. They are sharper than low-resolution images but still lack fine detail; images of this size are suitable for social media posts.

High-resolution images contain 5 to 20 MP. They are sharp and detailed enough for printing, editing, or online publication.

Ultra-high-resolution images exceed 20 MP. At this level, images show very fine detail and are well suited for editing and large-format printing.
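The megapixel categories above can be applied to any image from its pixel dimensions. The thresholds come from the text; assigning exact boundary values (1, 5, and 20 MP) to the lower class is an assumption, and the function names are illustrative. Note that the result says nothing about how much ground each pixel covers.

```python
def megapixels(width_px: int, height_px: int) -> float:
    """Pixel count of an image in megapixels (millions of pixels)."""
    return width_px * height_px / 1_000_000


def megapixel_class(mp: float) -> str:
    """Category from the text; exact boundary values go to the lower class (assumption)."""
    if mp <= 1:
        return "low"
    if mp <= 5:
        return "medium"
    if mp <= 20:
        return "high"
    return "ultra-high"


for width, height in ((1280, 720), (1920, 1080), (4000, 3000), (6000, 4000)):
    mp = megapixels(width, height)
    print(f"{width}x{height}: {mp:.1f} MP, {megapixel_class(mp)}")
```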

Spatial Resolution of LiDAR Imaging

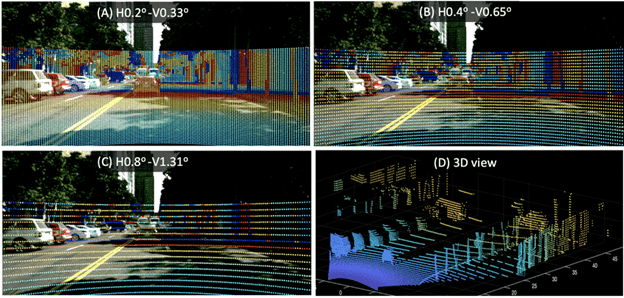

LiDAR (Light Detection and Ranging) is a technology that uses laser pulses to measure distances to objects and create highly accurate three-dimensional models. The spatial resolution of LiDAR is determined by the density of laser points on the surface, which varies with the purpose of the survey. A higher pulse rate provides more points per unit area, increasing the detail and accuracy of the data. For instance, in urban scanning LiDAR can capture fine details of buildings and infrastructure, while in natural landscape surveys it can detect subtle terrain changes.

LiDAR uses laser light, whereas radar uses radio waves. LiDAR systems emit pulsed laser beams in the near-infrared (NIR) spectrum, typically at wavelengths of 905 nm or 1550 nm, while radar systems use microwaves with much longer wavelengths.
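A common first-order estimate of airborne LiDAR point density divides the pulse rate by the area swept per second (ground speed × swath width); the average point spacing is then roughly the inverse square root of that density. The sketch assumes one recorded return per pulse, flat terrain, and evenly spread points; the survey parameters and function names are hypothetical.

```python
import math


def swath_width_m(altitude_m: float, scan_angle_deg: float) -> float:
    """Ground swath of a scanner with total field of view scan_angle_deg, flat terrain."""
    return 2 * altitude_m * math.tan(math.radians(scan_angle_deg) / 2)


def point_density_per_m2(pulse_rate_hz: float, speed_m_s: float, swath_m: float) -> float:
    """Average points per square meter: pulses per second / area swept per second."""
    return pulse_rate_hz / (speed_m_s * swath_m)


def mean_point_spacing_m(density_per_m2: float) -> float:
    """Approximate spacing between neighboring points for an even distribution."""
    return 1 / math.sqrt(density_per_m2)


# Hypothetical survey: 60 deg field of view, 50 m/s ground speed.
for altitude, rate in ((100, 100_000), (100, 400_000), (300, 400_000)):
    swath = swath_width_m(altitude, 60)
    density = point_density_per_m2(rate, 50, swath)
    print(f"{altitude} m, {rate // 1000} kHz: {density:.1f} pts/m^2, "
          f"spacing {mean_point_spacing_m(density):.2f} m")
```

Quadrupling the pulse rate quadruples the density and halves the spacing, while flying higher widens the swath and spreads the same pulses more thinly.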

One of the key aspects of LiDAR is its vertical imaging capability, which minimizes geometric distortions compared to oblique radar imaging.

The illustration above shows different spatial resolutions of LiDAR used in modeling. Panels A-C demonstrate the sampling resolution for various horizontal and vertical resolutions (degree/sample). Panel D represents a point cloud view corresponding to Panel A. Point clouds are useful for human visualization and contain the same information as depth maps.
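Angular sampling of this kind (degrees per sample) translates into a point spacing that grows with distance. The sketch below assumes a flat target perpendicular to the central beam, where two adjacent beams separated by Δθ hit points 2R·tan(Δθ/2) apart at range R; the angular steps and ranges are hypothetical, not those of the panels above.

```python
import math


def point_spacing_m(range_m: float, angular_step_deg: float) -> float:
    """Distance between two adjacent samples on a flat target perpendicular to the central beam."""
    return 2 * range_m * math.tan(math.radians(angular_step_deg) / 2)


for step in (0.4, 0.2, 0.1):
    spacings = ", ".join(f"{point_spacing_m(r, step):.2f} m at {r} m" for r in (10, 50, 100))
    print(f"{step} deg/sample: {spacings}")
```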

Unlike optical imaging, LiDAR does not depend on natural lighting, so it can operate at night. However, unlike radar, it cannot see through cloud cover, and fog or heavy precipitation scatter the laser pulses and degrade the data. LiDAR does not provide color information like optical cameras, but it compensates with precise distance measurements, which are critical for surveying, urban modeling, infrastructure analysis, and monitoring terrain changes.

Optical systems rely on visible light and produce images similar to how the human eye perceives them, making them valuable for visual interpretation. However, LiDAR excels in obtaining precise data about the shape and structure of surfaces, making it indispensable for tasks requiring exact distance measurements and the creation of detailed 3D models.

Pansharpening

Pansharpening is a method for enhancing the spatial resolution of raster imagery. It fuses a high-resolution panchromatic band with a lower-resolution multichannel (multispectral) raster dataset.

Because of limits on how much light a detector can collect in each narrow band, the panchromatic channel typically has higher spatial resolution than the "color" channels. The panchromatic channel records a single broad band as grayscale values from black to white, so it has low spectral resolution but high spatial resolution. The goal of the fusion is to improve spatial resolution while preserving the spectral properties (colors) of the multichannel image.
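The text does not prescribe a particular fusion algorithm. As one classic example, the sketch below implements a Brovey-style (ratio) transform in NumPy: the multispectral bands are upsampled to the panchromatic grid by nearest-neighbor repetition, and each band is multiplied by the ratio of the panchromatic value to the mean of the bands at that pixel. It assumes the images are already co-registered and that the panchromatic grid is an exact integer multiple of the multispectral one; the function name and sample arrays are hypothetical, and production tools use better upsampling and spectral weighting.

```python
import numpy as np


def brovey_pansharpen(ms: np.ndarray, pan: np.ndarray) -> np.ndarray:
    """Brovey-style pansharpening.

    ms:  multispectral image, shape (bands, h, w), lower resolution.
    pan: panchromatic image, shape (h * k, w * k) for an integer factor k.
    Returns a (bands, h * k, w * k) float array.
    """
    bands, h, w = ms.shape
    k = pan.shape[0] // h
    if k < 1 or pan.shape != (h * k, w * k):
        raise ValueError("pan must be an integer multiple of the multispectral grid")
    # Nearest-neighbor upsampling of every band onto the panchromatic grid.
    up = np.repeat(np.repeat(ms.astype(float), k, axis=1), k, axis=2)
    intensity = up.mean(axis=0)
    # Scale every band by pan / intensity; pixels with zero intensity stay 0.
    ratio = np.divide(pan.astype(float), intensity,
                      out=np.zeros_like(intensity), where=intensity > 0)
    return up * ratio


ms = np.array([[[10, 20], [30, 40]],
               [[20, 40], [60, 80]],
               [[30, 60], [90, 120]]])
pan = np.arange(1, 17, dtype=float).reshape(4, 4) * 10
sharp = brovey_pansharpen(ms, pan)
print(sharp.shape)  # (3, 4, 4)
```

Because every band at a pixel is scaled by the same factor, band ratios (and therefore hue) are preserved while the brightness pattern comes from the sharper panchromatic band; the absolute band values do change, so this approach suits visual interpretation better than radiometric analysis.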

This processing technique is applied to enhance detail in urban areas for identifying more infrastructure objects, thus aiding their analysis. It is also used to monitor crop growth and field coverage more accurately, which helps in development planning. This method has become essential in cartography, environmental monitoring, and other fields requiring object interpretation.

Advantages of Pansharpening include:

- Combining the high spatial resolution of black-and-white imagery with multispectral information, yielding more detailed and sharper images than standalone multispectral images.

- Maintaining the spectral characteristics of multispectral images, enabling more accurate analysis and classification of objects in the imagery.

- Providing improved images that are more suitable for visual analysis and interpretation, which is beneficial for various applications like cartography and land monitoring.

However, there are some drawbacks:

- The synthesis process requires significant computational resources, especially when working with large datasets, such as high-resolution satellite imagery.

- Improper combination of different image types or the presence of noise can introduce artifacts in the resulting image, necessitating more careful control and preprocessing of input data.

- Successful synthesis requires precise alignment of multispectral and black-and-white images, which may demand specialized image processing and GIS expertise.

The spatial resolution of multispectral data is often lower than that of panchromatic data due to differences in how light is captured by sensors. Multispectral sensors capture data in several narrow spectral bands, each transmitting information about light in specific portions of the spectrum, such as visible, near-infrared, or other parts of the electromagnetic spectrum. To ensure sufficient sensitivity and image quality in each of these narrow bands, the pixel size on the detector array is often increased, reducing spatial resolution. This allows more light to be captured in each channel but at the expense of reduced image detail.

In contrast, panchromatic sensors capture light across the entire visible spectrum simultaneously, utilizing all available light information to form the image. This increases sensitivity and allows for smaller pixels on the detector. Consequently, panchromatic images have higher spatial resolution, as the detector can capture finer surface details. This approach also allows for increased focal length or reduced pixel size, further improving spatial resolution compared to multispectral data.

Super Resolution

Super Resolution is a method for enhancing the spatial resolution of satellite imagery by using machine learning algorithms to restore details lost during resolution reduction. This method produces higher-quality results but requires more time and computational resources. Super resolution enables more detailed and accurate results compared to other resolution enhancement methods like interpolation.

Interpolation is a method where new pixel values are calculated based on the values of neighboring pixels. While simple to implement and less resource-intensive, interpolation can lead to artifacts and reduced detail.
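For comparison with super resolution, plain bilinear interpolation fits in a few lines of NumPy. Each output pixel is a distance-weighted average of the four nearest input pixels, so the result is smoother but contains no information that was not already in the input. The corner-aligned sampling grid used here is one of several conventions, chosen for simplicity, and the function name is illustrative.

```python
import numpy as np


def bilinear_resize(img: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Resize a 2-D array with bilinear interpolation on a corner-aligned grid."""
    in_h, in_w = img.shape
    img = img.astype(float)
    # Fractional source coordinates for every output row and column.
    ys = np.linspace(0, in_h - 1, out_h)
    xs = np.linspace(0, in_w - 1, out_w)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, in_h - 1)
    x1 = np.minimum(x0 + 1, in_w - 1)
    wy = (ys - y0)[:, None]  # vertical weights, one per output row
    wx = (xs - x0)[None, :]  # horizontal weights, one per output column
    # Interpolate along x on the upper and lower neighbor rows, then along y.
    top = img[np.ix_(y0, x0)] * (1 - wx) + img[np.ix_(y0, x1)] * wx
    bottom = img[np.ix_(y1, x0)] * (1 - wx) + img[np.ix_(y1, x1)] * wx
    return top * (1 - wy) + bottom * wy


small = np.array([[0, 10], [20, 30]])
print(bilinear_resize(small, 3, 3))
```

Resampling 50 cm imagery to 30 cm with such a function would scale each axis by 0.5 / 0.3 ≈ 1.67; the new pixels are blends of existing ones, which is why interpolation alone cannot recover finer detail.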

This technology is particularly useful in the field of remote sensing. For instance, original 50-centimeter imagery can be recalculated to 30-centimeter resolution, improving image sharpness and the clarity of linear objects such as roads and building edges.

The following image of Xiadiancun, China, was captured at its native 30 cm resolution, the highest spatial resolution currently available from commercial optical satellites.

Applying its HD technology to the same image, Maxar produces a 15-centimeter HD product (Fig. 17) that reveals finer details on building facades. Compared with the native 30-centimeter image (Fig. 16), the HD image makes specific features easier to interpret. For instance, small objects such as utility covers or power lines can be identified in the 15-centimeter HD image.

Features and Benefits:

- Reduced pixelation

- Improved automatic object detection

- Reveals small details and/or features previously visible only on aerial imagery

- Enhanced vehicle identification capabilities

- Produces aesthetically improved images with sharp contours and well-reconstructed details

- HD technology does not increase resolution

- HD images contain more pixels than originally captured, reducing visible pixelation

- If an object is absent in the original image, HD technology cannot display it

- HD technology intelligently increases pixel count to maximize useful information while minimizing unnecessary noise and visible pixelation

| Parameter | Value |
|---|---|
| Product Level | HD View Ready (OR2A) & Map Ready (Ortho) |
| Image Bands | Pan and Multispectral |
| Cloud Cover | 3%, up to 20% allowed |
| Pointing Accuracy | 5 m CE90 |
| Absolute Accuracy | < 4.2 m CE90 |
| Off-Nadir Angle | < 30 degrees |
| Sun Elevation | > 30 degrees (in some areas > 15 degrees) |
| Bit Depth | 8 and 16 bits |
| Projection | UTM/WGS84 |

The resulting image features sharp lines and well-defined details, but it is important to note that HD technology does not increase resolution. Images created using HD technology have more pixels than the original, but the resolution remains unchanged. If an object is absent in the original, this technology will not render it, as it focuses solely on maximizing useful information and minimizing unnecessary noise. This method targets specific types of information in the source image and is used to distinguish details that may be unclear or hard to discern.

Trends and Future of Spatial Resolution

Spatial resolution in remote sensing systems continues to improve due to technological advancements and innovations in sensors, optics, and data processing. Several significant trends and prospects in this field include the following:

- The drive to obtain more detailed images and data keeps pushing spatial resolution higher. High-resolution sensors, with smaller detector pixels and larger numbers of detectors, enable the capture of highly detailed images. As of September 11, 2024, launches of remote sensing satellites with spatial resolutions of 0.12–0.15 m were scheduled by 2025.

- The combination of various types of sensors (e.g., optical, radar, and lidar) allows for the creation of multispectral and multimodal systems, enhancing the quality and informativeness of the collected data.

- The use of machine learning methods for image processing and quality enhancement improves spatial resolution by reducing noise, enhancing contrast, and sharpening images.

- The development of technologies such as CubeSats (small cube-format satellites), compact and highly maneuverable remote sensing devices, opens up new possibilities for obtaining high-resolution data in diverse conditions.

- In the future, we can anticipate enhanced capabilities of remote sensing systems in distinguishing details and characteristics of objects on the Earth's surface, which will broaden the range of applications for these technologies.